Computer vision technology is rapidly transforming various industries, from healthcare to autonomous vehicles. This technology uses artificial intelligence to enable computers to “see” and interpret images and videos, mimicking human vision. Understanding its core principles, applications, and challenges is crucial for navigating this evolving field.

This detailed exploration delves into the fundamental concepts, techniques, and applications of computer vision. From image acquisition and preprocessing to object detection and recognition, we’ll unravel the intricate processes behind this powerful technology. We’ll also examine its ethical considerations and future potential.

Introduction to Computer Vision Technology

Computer vision is a field of artificial intelligence that enables computers to “see” and interpret the visual world. This involves extracting meaningful information from images and videos and taking actions based on that information. It is a rapidly evolving field with applications spanning diverse sectors, from autonomous vehicles to medical diagnostics. The core goal is to let machines perceive and comprehend their visual environment, much as humans do.

This ability to interpret images and videos allows for automation of tasks that previously required human intervention, leading to significant advancements in various fields.

Definition of Computer Vision Technology

Computer vision technology is the ability of computers to “see” and interpret images and videos, much as humans do. This involves using algorithms to analyze visual data, extract meaningful information, and make decisions based on that information. It is a multidisciplinary field that combines image processing, machine learning, and artificial intelligence.

Fundamental Principles of Computer Vision

Computer vision relies on several fundamental principles. These include image formation, feature extraction, and object recognition. Image formation describes how light interacts with objects and creates an image. Feature extraction focuses on identifying distinctive characteristics within images that can be used to distinguish different objects or scenes. Object recognition is the ability to identify and categorize objects in an image.

Together, these principles underpin the operation of computer vision systems.

Core Components of a Computer Vision System

A computer vision system typically comprises several key components. First, image acquisition captures the visual data. Then, image preprocessing cleans and enhances the raw image data to improve the performance of subsequent steps. Feature extraction identifies and isolates key features from the processed image. Next, classification or object recognition categorizes the identified features.

Finally, decision-making uses the results of the recognition process to trigger actions.

Historical Overview of Computer Vision’s Development

Computer vision research began in the early 1960s with initial explorations of how to enable computers to “see”. Early work focused on low-level image processing tasks; as technology advanced, the field expanded to higher-level tasks such as object recognition and scene understanding. Subsequent decades brought gradual advances in algorithms and hardware that enabled more complex tasks, and in recent years powerful computing and large datasets have spurred significant progress.

Evolution of Computer Vision Algorithms

Early computer vision algorithms relied heavily on handcrafted features. However, the rise of machine learning has led to significant advancements. Deep learning algorithms, particularly convolutional neural networks (CNNs), have revolutionized computer vision, enabling the development of more accurate and robust models for tasks such as object detection and image classification.

Diagram of Image Processing in Computer Vision

Image Acquisition --> Image Preprocessing --> Feature Extraction --> Object Recognition --> Decision Making --> Action Output

This diagram illustrates the sequence of steps involved in image processing.

Image acquisition captures the raw image data. Preprocessing enhances and prepares the image for feature extraction. Feature extraction identifies key features in the image. Object recognition classifies these features. Finally, the system makes a decision and outputs an action based on the recognition results.

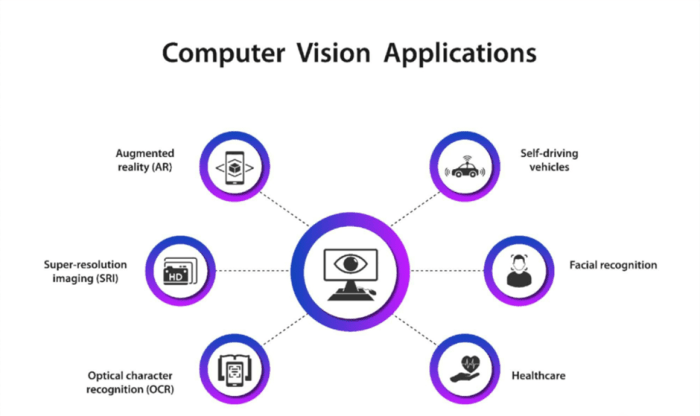

Applications of Computer Vision

Computer vision, the ability of computers to “see” and interpret images, has rapidly expanded its applications across diverse sectors. From medical diagnoses to self-driving cars, computer vision’s capabilities are transforming industries and daily life. This technology’s capacity to analyze visual data and extract meaningful information is driving innovation in countless areas.

Healthcare Applications

Computer vision is revolutionizing healthcare by automating tasks and improving diagnostic accuracy. Medical imaging analysis is a key application. Systems can automatically detect anomalies in X-rays, CT scans, and MRIs, potentially aiding in early disease detection and treatment planning. Furthermore, computer vision is used in surgical procedures to guide instruments and enhance precision. Skin cancer detection is another significant area of application.

Sophisticated algorithms can analyze skin lesions in images, identifying potentially cancerous areas for prompt medical intervention.

Autonomous Vehicle Applications

Computer vision plays a critical role in autonomous vehicles, enabling them to perceive their environment. Sophisticated algorithms process video feeds from cameras, allowing the vehicle to identify and track objects like pedestrians, vehicles, and traffic signals. This data informs the vehicle’s navigation, enabling safe and efficient autonomous driving. Examples include lane-marking detection for lane keeping, traffic-signal detection for an appropriate response, and pedestrian and cyclist detection for collision avoidance.

Robotics Applications

Computer vision enables robots to interact with and navigate their environment. Robots equipped with computer vision systems can identify and manipulate objects, perform complex tasks, and adapt to changing situations. Industrial robots use computer vision to sort objects, assemble parts, and perform quality control tasks. Service robots can utilize computer vision for navigation and interaction with humans, enabling them to perform tasks like delivering packages or assisting with daily chores.

Security System Applications

Computer vision is transforming security systems by enhancing surveillance capabilities. Systems can automatically detect suspicious activities, identify individuals, and respond to threats. Surveillance cameras equipped with computer vision algorithms can monitor public spaces, identify potential security breaches, and assist in law enforcement. These systems can recognize faces, detect movement, and trigger alerts based on pre-defined parameters.

Agricultural Applications

Computer vision is finding increasingly important uses in modern agriculture. Crop monitoring and yield prediction are significant applications. Systems can analyze images of crops to assess plant health, detect pests or diseases, and estimate yields, thereby optimizing resource management and maximizing crop production. Automated harvesting is also possible. Computer vision can enable robots to identify and harvest crops with precision, minimizing waste and maximizing efficiency.

Table of Computer Vision Applications

| Application | Advantages | Disadvantages |
|---|---|---|
| Healthcare (Medical Imaging Analysis) | Improved diagnostic accuracy, early disease detection, reduced human error, increased efficiency | Requires large datasets for training, potential for misinterpretations, need for ongoing maintenance and updates |
| Autonomous Vehicles | Enhanced safety, improved traffic flow, reduced human error, potential for increased efficiency | Dependence on reliable data, vulnerability to environmental factors, potential for ethical dilemmas |
| Robotics | Enhanced dexterity, improved object manipulation, increased automation, reduced human labor | Cost of implementation, complexity of algorithms, potential for job displacement |
| Security Systems | Improved surveillance, enhanced security, real-time threat detection, reduced response time | Privacy concerns, potential for bias in algorithms, need for robust data security |
| Agriculture | Optimized resource management, maximized crop production, minimized waste, increased efficiency | High initial investment, need for specialized hardware, potential for disruption of traditional farming practices |

Image Acquisition and Preprocessing

Image acquisition and preprocessing are fundamental steps in computer vision. High-quality images are crucial for accurate analysis, and preprocessing techniques significantly impact the performance of subsequent algorithms. These steps prepare the raw image data for effective processing by computer vision systems. The specific acquisition method and preprocessing techniques depend on the computer vision task.

Image acquisition involves capturing visual data, ranging from simple digital photographs to complex sensor-based recordings. Preprocessing techniques, in turn, aim to enhance the image quality and suitability for analysis, thereby mitigating the effects of undesirable characteristics like noise and variations in lighting conditions. Understanding and applying appropriate preprocessing steps is vital for accurate and reliable computer vision outcomes.

Methods for Acquiring Images

Various methods are employed for acquiring images in computer vision. Digital cameras, a common approach, use sensors to convert light into digital signals. Specialized sensors, such as those used in medical imaging or satellite imagery, capture data tailored to specific applications. These sensors often have different spectral sensitivities, resolution capabilities, and dynamic ranges, impacting the subsequent processing steps.

Scanners, used to capture documents or 3D models, provide other types of image data. Mobile devices have also become an increasingly common source of images for computer vision tasks.

Image Preprocessing Techniques

A wide array of techniques is applied to enhance image quality for computer vision tasks. These techniques can improve image clarity, reduce noise, and adjust contrast, ultimately influencing the performance of subsequent analysis. Common techniques include noise reduction, contrast enhancement, and sharpening. In addition, image resizing and cropping are essential steps for adjusting the image’s dimensions and focusing on specific regions of interest.

Importance of Image Quality

Image quality plays a critical role in computer vision tasks. High-quality images lead to more accurate analysis and more robust results. Poor image quality, including noise, low resolution, and uneven lighting, can significantly affect the accuracy of object detection, recognition, and other tasks. Consider a scenario where a self-driving car needs to identify a pedestrian. A blurry or noisy image of the pedestrian could lead to incorrect identification or delayed response, potentially jeopardizing safety.

Noise Reduction in Image Preprocessing

Noise reduction is a crucial preprocessing step to mitigate the negative effects of noise in images. Noise can originate from various sources, including sensor imperfections, environmental factors, and transmission errors. Median filtering and Gaussian filtering are two common techniques: median filtering is particularly effective against salt-and-pepper (impulse) noise and tends to preserve edges, while Gaussian filtering suppresses fine-grained random noise at the cost of some edge blurring. These techniques help improve the clarity and reliability of images, which is critical for many computer vision applications.
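Median filtering can be sketched in a few lines of Python. This is a minimal illustration, assuming a grayscale image stored as a list of lists of 0-255 integers; the function name `median_filter` and the border policy (clipping the window at the image edges) are choices made for this example, and real pipelines would usually call an optimized routine such as OpenCV's `cv2.medianBlur`.

```python
def median_filter(img, k=3):
    """Apply a k x k median filter to a grayscale image (list of lists).

    At the borders the window is clipped to the pixels inside the image.
    For even-sized clipped windows, the upper of the two middle values is
    used so that the output stays integral.
    """
    h, w = len(img), len(img[0])
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Collect and sort the neighborhood values inside the image.
            window = sorted(
                img[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            out[y][x] = window[len(window) // 2]
    return out


# A flat image with one "salt" pixel: the outlier is removed entirely.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
clean = median_filter(noisy)
```

Replacing the median with a weighted average using Gaussian weights would turn this into Gaussian filtering, which smooths fine-grained noise but also blurs edges more.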

Contrast and Sharpness Enhancement

Enhancing contrast and sharpness in images is essential for improving their visual appeal and enabling effective analysis by computer vision algorithms. Contrast enhancement techniques aim to increase the difference between bright and dark regions, making details more visible. Sharpness enhancement techniques make intensity transitions at region boundaries steeper, resulting in crisper edges and greater visual clarity.
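Histogram equalization, one common contrast-enhancement technique, maps each intensity through the image's cumulative histogram so that the output spreads over the full 0-255 range. A minimal sketch, assuming an 8-bit grayscale image stored as a list of lists; `equalize_histogram` is a hypothetical helper name:

```python
def equalize_histogram(img, levels=256):
    """Histogram-equalize a grayscale image with integer values in [0, levels)."""
    flat = [v for row in img for v in row]
    n = len(flat)

    # Histogram of intensity values.
    hist = [0] * levels
    for v in flat:
        hist[v] += 1

    # Cumulative distribution function (CDF) of the histogram.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:
        # Constant image: there is no contrast to stretch.
        return [row[:] for row in img]

    # Look-up table: darkest occupied level -> 0, brightest -> levels - 1.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (n - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in img]


low_contrast = [[100, 100], [101, 102]]
print(equalize_histogram(low_contrast))  # [[0, 0], [128, 255]]
```

Three nearly identical gray levels are pushed apart to span the whole range, which is also why equalization can exaggerate noise in flat regions.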

Image Resizing and Cropping

Image resizing and cropping are fundamental preprocessing steps for adapting images to specific computer vision tasks. Resizing alters the dimensions of the image, potentially improving computational efficiency or fitting the image into a particular input format for the algorithm. Cropping isolates a specific region of interest within the image, focusing on the pertinent details for the task at hand.

For example, cropping to a known region of interest can remove irrelevant background and reduce computation, which can improve the accuracy of subsequent analysis.
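Both operations are simple index manipulations. The sketch below assumes a list-of-lists image and uses a basic nearest-neighbor resize (the simplest interpolation choice; libraries also offer bilinear and other methods). `resize_nearest` and `crop` are illustrative names, not a library API.

```python
def resize_nearest(img, new_h, new_w):
    """Resize by mapping each output pixel to a source pixel via integer scaling."""
    h, w = len(img), len(img[0])
    return [
        [img[y * h // new_h][x * w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def crop(img, top, left, height, width):
    """Return the height x width region whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


img = [[4 * y + x for x in range(4)] for y in range(4)]  # values 0..15
small = resize_nearest(img, 2, 2)  # [[0, 2], [8, 10]]
roi = crop(img, 1, 1, 2, 2)        # [[5, 6], [9, 10]]
```

Downscaling from 4x4 to 2x2 discards three quarters of the pixels, which illustrates the information-loss trade-off of resizing.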

Comparison of Image Preprocessing Methods

| Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| Noise Reduction (Median Filtering) | Replaces each pixel with the median value of its neighborhood. | Effective for salt-and-pepper (impulse) noise; tends to preserve edges. | Can remove fine details and thin lines; less effective on Gaussian noise. |
| Contrast Enhancement (Histogram Equalization) | Redistributes pixel intensities more evenly across the available range, increasing contrast. | Simple to implement. | Can over-enhance noise. |
| Sharpness Enhancement (Unsharp Masking) | Adds a scaled difference between the original and a blurred copy back to the original, emphasizing edges and details. | Improves image details. | Can amplify noise. |
| Image Resizing | Alters image dimensions to fit specific requirements. | Downscaling improves computational efficiency. | Potential for information loss. |
| Image Cropping | Selects a portion of the image. | Focuses on the region of interest. | May exclude important information. |
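Unsharp masking from the table can be made concrete: the sharpened image is `original + amount * (original - blurred)`. This sketch assumes a list-of-lists grayscale image and, to stay short, uses a 3x3 box blur where implementations commonly use a Gaussian blur; the helper names and the `amount` default are illustrative assumptions.

```python
def box_blur(img):
    """3x3 mean filter; the window is clipped at the image borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[yy][xx]
                for yy in range(max(0, y - 1), min(h, y + 2))
                for xx in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = sum(vals) / len(vals)
    return out


def unsharp_mask(img, amount=1.0):
    """Sharpen by adding back the detail (original minus blurred), clamped to 0..255."""
    blurred = box_blur(img)
    return [
        [min(255, max(0, round(p + amount * (p - b)))) for p, b in zip(row, brow)]
        for row, brow in zip(img, blurred)
    ]


step = [[50, 50, 200, 200]]  # one row containing a soft edge
sharp = unsharp_mask(step)
```

At the edge, the dark side becomes darker and the bright side brighter, steepening the transition; random noise is boosted in exactly the same way, which is the disadvantage noted in the table.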

Feature Extraction and Representation

Feature extraction is a crucial step in computer vision. It involves transforming raw image data into a more compact and informative representation that better captures the essential characteristics of the objects or scenes within the image. This representation is vital for subsequent tasks such as object detection, image segmentation, and classification. Effective feature extraction techniques allow computer vision algorithms to learn patterns and relationships within images, improving their accuracy and efficiency.

Different Feature Extraction Techniques

Various techniques are employed for feature extraction, each with its strengths and weaknesses. These techniques often target specific characteristics of images, such as edges, corners, textures, or color distributions. Understanding these differences is key to selecting the appropriate method for a given computer vision task.

- Edge Detection: Edge detection methods highlight the boundaries between different regions in an image. These boundaries often correspond to significant object features. Popular algorithms include the Sobel operator, the Canny edge detector, and the Prewitt operator. Edge detection is often a preliminary step in object recognition and scene understanding, highlighting the contours of objects.

- Color Histograms: Color histograms represent the distribution of colors within an image. They are useful for identifying objects based on their color characteristics. For example, a histogram of a red car would have a significant peak in the red color range. Color histograms are valuable for object classification and recognition, especially when dealing with objects with distinct colors.

- Texture Features: Texture features describe the spatial arrangement of intensity variations in an image. These features are useful for identifying textures, such as wood grain, brickwork, or fabric patterns. Techniques for extracting texture features include Gabor filters and Local Binary Patterns (LBP).

- Shape Features: Shape features describe the geometric form of objects within an image. These features can be extracted from contours, moments, or other shape descriptors. Examples include the perimeter, area, and aspect ratio of an object.
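As an example of the texture features in the list above, the basic 8-neighbor Local Binary Pattern (LBP) encodes each pixel by comparing it with its neighbors, and a histogram of these codes describes the texture. A minimal sketch, assuming a list-of-lists grayscale image; the neighbor ordering (clockwise from the top-left, bit 0 first) is one of several conventions, and rotation-invariant or "uniform" LBP variants are omitted.

```python
# Offsets (dy, dx) of the 8 neighbors, clockwise from the top-left.
# Neighbor i contributes bit i of the code.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, y, x):
    """8-bit LBP code of an interior pixel: bit set where neighbor >= center."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(NEIGHBORS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """Count LBP codes over all interior pixels; this is the texture descriptor."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist


patch = [[0, 0, 0], [0, 5, 0], [9, 9, 9]]  # brighter pixels along the bottom
code = lbp_code(patch, 1, 1)
```

Two images with similar LBP histograms have similar local intensity patterns, regardless of absolute brightness, since only comparisons with the center pixel are recorded.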

Examples of Features Used in Object Detection

Object detection systems often employ a combination of features to identify objects. For example, a system detecting cars might use features such as the shape of the car’s body, the color of the car, the presence of wheels, and the spatial arrangement of these features within the image.

Edge Detection Techniques in Computer Vision

Edge detection is a fundamental technique in computer vision for identifying significant changes in image intensity. It helps delineate boundaries and contours of objects, which are essential for object recognition.
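The Sobel operator, listed earlier among edge detectors, estimates horizontal and vertical intensity gradients with two 3x3 kernels; large gradient magnitudes mark edges. A minimal sketch, assuming a list-of-lists grayscale image and leaving border pixels at zero for simplicity. A Canny detector would add smoothing, non-maximum suppression, and hysteresis thresholding on top of gradients like these.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # responds to horizontal edges


def sobel_magnitude(img):
    """Gradient magnitude at each interior pixel; border pixels stay 0.0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = img[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            out[y][x] = math.hypot(gx, gy)
    return out


# A vertical step edge between dark (0) and bright (10) columns.
step = [[0, 0, 0, 10, 10, 10] for _ in range(3)]
edges = sobel_magnitude(step)
```

Only the two columns straddling the step get a nonzero response, which is the contour information later stages use.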

Application of Color Histograms in Feature Representation

Color histograms provide a compact representation of the color distribution in an image. They are particularly useful in tasks like object recognition and image retrieval, where the color of an object is a crucial characteristic.
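A sketch of a coarse RGB color histogram together with histogram intersection, a simple similarity score that can be used for color-based retrieval. It assumes pixels are `(r, g, b)` tuples with 0-255 channels and quantizes each channel into 4 bins (64 bins total); the bin count, the normalization, and the helper names are illustrative choices.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Normalized joint RGB histogram of an iterable of (r, g, b) tuples."""
    hist = [0.0] * bins_per_channel ** 3
    count = 0
    for r, g, b in pixels:
        # Quantize each 0-255 channel into bins_per_channel levels.
        ri = r * bins_per_channel // 256
        gi = g * bins_per_channel // 256
        bi = b * bins_per_channel // 256
        hist[(ri * bins_per_channel + gi) * bins_per_channel + bi] += 1
        count += 1
    if count == 0:
        raise ValueError("no pixels given")
    # Normalize so histograms of different-sized images are comparable.
    return [h / count for h in hist]


def histogram_intersection(h1, h2):
    """Similarity in [0, 1] for normalized histograms; 1 means identical."""
    return sum(min(a, b) for a, b in zip(h1, h2))


h_red = color_histogram([(250, 10, 10)] * 8)
h_car = color_histogram([(240, 20, 15)] * 6 + [(20, 20, 20)] * 2)  # mostly red
h_sky = color_histogram([(30, 120, 250)] * 8)
```

Here the mostly red patch scores 0.75 against the pure red one and the blue patch scores 0, so a query for red objects would rank the red patch first.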

Different Methods for Image Segmentation

Image segmentation aims to partition an image into meaningful regions. Various methods are available, including thresholding, region-growing, and edge-based segmentation. These techniques differ in their underlying principles and the types of features they exploit.
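Region growing starts from a seed pixel and repeatedly absorbs neighboring pixels that are similar enough. A minimal sketch, assuming a list-of-lists grayscale image, 4-connectivity, and a fixed tolerance around the seed's intensity (variants compare against the running region mean instead); `region_grow` is an illustrative name.

```python
from collections import deque


def region_grow(img, seed, tol):
    """Return the set of (y, x) pixels 4-connected to `seed` whose
    intensity is within `tol` of the seed pixel's intensity."""
    h, w = len(img), len(img[0])
    sy, sx = seed
    ref = img[sy][sx]
    region = {seed}
    queue = deque([seed])  # breadth-first traversal of the growing region
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if (
                0 <= ny < h
                and 0 <= nx < w
                and (ny, nx) not in region
                and abs(img[ny][nx] - ref) <= tol
            ):
                region.add((ny, nx))
                queue.append((ny, nx))
    return region


img = [[10, 12, 200], [11, 13, 210], [220, 215, 205]]
dark = region_grow(img, (0, 0), tol=5)     # the dark top-left block
bright = region_grow(img, (2, 2), tol=20)  # the bright L-shaped region
```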

Concept of Feature Vectors

Feature vectors are numerical representations of extracted features. Each element in the vector corresponds to a particular feature. For example, a feature vector for a car might contain numeric encodings of its color (such as histogram bins), shape descriptors, and position. Feature vectors are crucial for algorithms to process image data effectively.
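Because features such as area (in pixels) and a color ratio live on very different scales, feature vectors are often normalized before they are compared. The sketch below uses hypothetical three-element vectors (redness, area in pixels, aspect ratio), rescales each dimension to [0, 1] with min-max scaling, and compares vectors by Euclidean distance; the feature choice and the scaling method are illustrative assumptions.

```python
import math


def min_max_scale(vectors):
    """Rescale every dimension to [0, 1] across the given feature vectors."""
    dims = len(vectors[0])
    lows = [min(v[d] for v in vectors) for d in range(dims)]
    highs = [max(v[d] for v in vectors) for d in range(dims)]
    return [
        [
            (v[d] - lows[d]) / (highs[d] - lows[d]) if highs[d] > lows[d] else 0.0
            for d in range(dims)
        ]
        for v in vectors
    ]


# Hypothetical features: [redness, area in pixels, width / height]
car_a = [0.90, 5000, 2.0]
car_b = [0.85, 5200, 2.1]
sign = [0.80, 400, 1.0]

a, b, s = min_max_scale([car_a, car_b, sign])
print(math.dist(a, b) < math.dist(a, s))  # True: the two cars are closer
```

Without scaling, the area dimension alone would dominate every distance, and the color and shape features would contribute almost nothing.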

Table of Feature Extraction Methods and Applications

| Feature Extraction Method | Description | Application |
|---|---|---|
| Edge Detection | Highlights boundaries between regions. | Object recognition, image segmentation |
| Color Histograms | Represents color distribution. | Object classification, image retrieval |
| Texture Features | Describes spatial arrangement of intensity variations. | Material identification, texture recognition |
| Shape Features | Describes geometric form of objects. | Object detection, object recognition |

Object Detection and Recognition

Object detection and recognition are crucial components in computer vision, enabling machines to identify and locate specific objects within an image or video. These technologies are finding widespread application in various fields, from autonomous vehicles to medical image analysis, highlighting their importance in modern technology.

Object Detection Process

Object detection in computer vision involves identifying which objects are present in an image and localizing each one. The process typically begins with image acquisition and preprocessing, followed by feature extraction and representation. The system then classifies each candidate object and estimates its bounding box, yielding both the presence and the location of every detected object.

Object Recognition Algorithms

Various algorithms are employed for object recognition, each with its strengths and weaknesses. Traditional methods often rely on handcrafted features, while modern approaches leverage deep learning. Examples of traditional approaches include Haar-feature cascades, as in the Viola-Jones face detector, and Support Vector Machines (SVMs) trained on handcrafted features such as Histograms of Oriented Gradients (HOG). Deep learning models, such as Convolutional Neural Networks (CNNs), have achieved significant advances in accuracy and efficiency. These CNNs, often pre-trained on massive datasets, are now frequently used for object recognition tasks.

Challenges in Object Detection and Recognition

Several challenges impede the accuracy and efficiency of object detection and recognition systems. Variations in lighting conditions, object occlusions, and different viewpoints can significantly affect the performance of these systems. The complexity of real-world scenes with numerous objects and varied poses further complicates the process. The need for large, annotated datasets for training deep learning models also represents a significant challenge.

Bounding Boxes and Non-Maximum Suppression

Bounding boxes are rectangular regions used to delineate the location and extent of detected objects within an image. Non-maximum suppression (NMS) is a crucial post-processing step used to eliminate redundant, overlapping detections. It keeps the most confident bounding box for each object and suppresses weaker detections that overlap it significantly, with overlap typically measured by intersection-over-union (IoU). In most cases this leaves one detection per object, although distinct objects that overlap heavily can occasionally be suppressed by mistake.
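A minimal sketch of intersection-over-union (IoU) and greedy non-maximum suppression, assuming boxes given as `(x1, y1, x2, y2)` corner coordinates with one confidence score each. The 0.5 IoU threshold is a common but arbitrary default, and the function names are illustrative; multi-class detectors usually run this separately for each class.

```python
def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: visit boxes from most to least confident and keep a box
    only if it does not overlap any already-kept box above the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)  # [0, 2]: box 1 duplicates box 0 (IoU is about 0.68)
```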

Deep Learning in Object Detection

Deep learning, particularly Convolutional Neural Networks (CNNs), has revolutionized object detection. These models automatically learn hierarchical features from vast datasets, enabling accurate object localization and classification. Widely used models include Faster R-CNN, a two-stage detector that first proposes candidate regions and then classifies them, and the single-stage detectors YOLO (You Only Look Once) and SSD (Single Shot Detector), which predict boxes and classes in a single pass and are designed for real-time use. In general, YOLO is known for its speed, while Faster R-CNN is known for its accuracy.

Improving Object Recognition Accuracy

Several methods can enhance the accuracy of object recognition systems. Using larger and more diverse training datasets helps improve the model’s generalization ability. Data augmentation techniques, such as flipping, rotating, and cropping images, can artificially increase the size and diversity of the training data. Fine-tuning pre-trained models on specific datasets can adapt the models to the particular characteristics of the data being used.

Additionally, employing transfer learning, where knowledge gained from one task is applied to another, can improve the performance of object recognition systems.
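The augmentation techniques mentioned in this section can be sketched as pure functions on a list-of-lists image. This example assumes square images and applies a random horizontal flip, a random multiple-of-90-degree rotation, and a random crop; the probabilities, the crop size, and the function names are illustrative assumptions. For detection data, the bounding-box annotations would have to be transformed in the same way, which this sketch does not do.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def random_crop(img, size, rng):
    """Cut a random size x size patch (size must not exceed either dimension)."""
    h, w = len(img), len(img[0])
    top = rng.randint(0, h - size)
    left = rng.randint(0, w - size)
    return [row[left:left + size] for row in img[top:top + size]]


def augment(img, crop_size, rng):
    """Randomly flip, rotate by a multiple of 90 degrees, then crop."""
    out = img
    if rng.random() < 0.5:
        out = hflip(out)
    for _ in range(rng.randint(0, 3)):
        out = rotate90(out)
    return random_crop(out, crop_size, rng)


image = [[4 * y + x for x in range(4)] for y in range(4)]
rng = random.Random(42)  # seeded so the augmented samples are reproducible
samples = [augment(image, 3, rng) for _ in range(5)]
```

Each call yields a slightly different view of the same image, so a model sees more variety without any new labeling effort.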

Comparison of Object Detection Models

| Model | Speed | Accuracy | Complexity |
|---|---|---|---|
| Faster R-CNN | Moderate | High | Moderate |
| YOLO | High | Moderate | Low |
| SSD | High | Moderate | Low |

This table provides a general comparison of common object detection models. The speed, accuracy, and complexity of each model can vary depending on the specific implementation and the dataset used for training.

Image Classification and Segmentation

Image classification and segmentation are crucial components of computer vision, enabling machines to understand and interpret images. These techniques are fundamental for tasks ranging from autonomous driving to medical diagnosis. Image classification assigns an image to a predefined category, while segmentation precisely delineates the objects within an image, often at a pixel level. This allows for a detailed understanding of the image content, beyond simple categorization.

Image Classification Process

Image classification involves categorizing an image into one or more predefined classes. The process typically begins with acquiring an image and then preprocessing it to enhance the quality of the data. This might include resizing, color adjustments, or noise reduction. Next, relevant features are extracted from the preprocessed image. These features are then used as input to a machine learning algorithm, which learns to map the features to specific classes.

Finally, the algorithm classifies new images based on the learned mapping. A critical aspect is the choice of features and the algorithm used, as these significantly impact the accuracy of the classification.
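To make the "learn a mapping from features to classes" step concrete, here is a nearest-centroid classifier, one of the simplest learned mappings. It serves as a stand-in for illustration rather than one of the algorithms discussed later, and the two-element feature vectors (mean brightness, edge density) and labels are hypothetical.

```python
import math


def fit_centroids(features, labels):
    """Training: compute the mean feature vector of each class."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(f)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], f)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids, f):
    """Inference: assign the class whose centroid is nearest (Euclidean)."""
    return min(centroids, key=lambda y: math.dist(centroids[y], f))


train_x = [[0.80, 0.30], [0.90, 0.35], [0.10, 0.20], [0.15, 0.25]]
train_y = ["day", "day", "night", "night"]
model = fit_centroids(train_x, train_y)
print(predict(model, [0.85, 0.32]))  # day
```

The quality of such a classifier depends almost entirely on whether the chosen features separate the classes, which echoes the point above about feature choice.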

Image Segmentation Techniques

Various segmentation techniques exist, each with its strengths and weaknesses. These methods can be broadly categorized into thresholding, region-based, and edge-based approaches. Thresholding techniques involve identifying pixels with values above or below a predefined threshold, often used for simple segmentation tasks. Region-based techniques group similar pixels into regions, and then refine these regions based on the characteristics of the image content.

Edge-based techniques focus on identifying boundaries between different objects or regions in an image, using edge detection algorithms to identify these transitions. Each technique has a specific application depending on the complexity of the image and the desired outcome.
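For the thresholding approach, Otsu's method is a well-known way to choose the threshold automatically: it picks the value that maximizes the between-class variance of the resulting foreground and background. A minimal sketch, assuming 8-bit intensities in a flat list; `otsu_threshold` is an illustrative name, and libraries such as OpenCV provide optimized implementations.

```python
def otsu_threshold(pixels, levels=256):
    """Return t such that splitting into (<= t) and (> t) maximizes
    the between-class variance of the two groups."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * hist[i] for i in range(levels))

    best_t, best_score = 0, -1.0
    w0, sum0 = 0, 0  # pixel count and intensity sum of the "<= t" class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty: not a valid split
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Using raw counts instead of probabilities scales every score by the
        # same constant, so the maximizing threshold is unchanged.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


pixels = [10] * 50 + [200] * 50  # dark background, bright foreground
t = otsu_threshold(pixels)
mask = [p > t for p in pixels]   # True marks foreground pixels
```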

Machine Learning in Image Classification

Machine learning plays a pivotal role in image classification. Algorithms like support vector machines (SVMs), decision trees, and naive Bayes classifiers can be employed for image classification tasks. The choice of algorithm depends on factors such as the size and complexity of the dataset, the desired level of accuracy, and the computational resources available. These algorithms learn from labeled image data, building a model that can accurately predict the class of new images.

Comparison of Image Classification Algorithms

Different image classification algorithms exhibit varying performance characteristics. Support Vector Machines (SVMs) are known for their effectiveness in high-dimensional spaces, but can be computationally expensive. Decision trees provide a clear visual representation of the classification process, but may not always generalize well to unseen data. Naive Bayes classifiers are computationally efficient but assume independence among features, which may not hold true in real-world image data.

A table summarizing the key characteristics of these algorithms is presented below:

| Algorithm | Strengths | Weaknesses | Use Cases |
|---|---|---|---|
| Support Vector Machines (SVM) | Effective in high-dimensional spaces, good generalization | Computationally expensive on large datasets, complex to interpret | High-dimensional features, small-to-medium datasets, high accuracy required |
| Decision Trees | Easy to interpret, clear visual representation | May not generalize well to unseen data, prone to overfitting | Understanding the classification process, smaller datasets |
| Naive Bayes | Computationally efficient | Assumes feature independence, may not perform well with complex data | Simple classification tasks, speed is critical |

Deep Learning in Image Segmentation

Deep learning models, particularly convolutional neural networks (CNNs), have revolutionized image segmentation. These models learn hierarchical representations of image features, enabling them to capture intricate patterns, fine details, and variations in complex images. When trained on large annotated datasets, they can achieve high segmentation accuracy.

Pixel-Level Accuracy in Image Segmentation

Pixel-level accuracy is crucial in image segmentation because it directly reflects the precision of the segmentation results. Precise delineation of objects at the pixel level is essential in many applications, such as medical image analysis where accurate identification of tissues or organs is vital. Errors at the pixel level can significantly impact downstream tasks and lead to misinterpretations or inaccuracies in subsequent analyses.

For example, in medical imaging, an inaccurate segmentation of a tumor can lead to incorrect treatment decisions.
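Pixel-level quality is usually quantified by comparing a predicted mask with a ground-truth mask. This sketch, assuming binary masks stored as lists of lists of 0/1, computes pixel accuracy, intersection-over-union (IoU), and the Dice coefficient; the toy "tumor" masks are made up for illustration.

```python
def mask_metrics(pred, truth):
    """Pixel accuracy, IoU, and Dice for two same-sized binary masks."""
    tp = fp = fn = tn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1  # correctly labeled object pixel
            elif p:
                fp += 1  # background labeled as object
            elif t:
                fn += 1  # object pixel that was missed
            else:
                tn += 1  # correctly labeled background
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # If both masks are empty, treat the overlap scores as perfect.
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    dice = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    return accuracy, iou, dice


truth = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
pred = [[1, 0, 0, 0], [1, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
acc, iou, dice = mask_metrics(pred, truth)
```

Here pixel accuracy is 0.875 even though half of the "tumor" is missed, because background pixels dominate; IoU (0.5) and Dice (about 0.67) expose the miss, which is why overlap metrics are commonly reported for small structures.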

Deep Learning in Computer Vision

Deep learning has revolutionized computer vision, enabling systems to perform tasks that were previously impossible or extremely challenging. Its ability to automatically learn complex features from vast amounts of data has led to significant advancements in areas like object recognition, image classification, and image segmentation. This approach leverages artificial neural networks with multiple layers, allowing for the extraction of intricate patterns and relationships within images.


Role of Deep Learning in Computer Vision

Deep learning’s crucial role in computer vision stems from its capacity to automatically learn complex visual features. Traditional methods often relied on handcrafted features, which required extensive domain expertise and were limited in their ability to generalize to new data. Deep learning, on the other hand, learns these features directly from data, enabling it to adapt to various image variations and complexities.

This adaptability translates to superior performance in diverse real-world scenarios.

Convolutional Neural Networks (CNNs) in Computer Vision

Convolutional Neural Networks (CNNs) are a cornerstone of deep learning in computer vision. Their architecture is specifically designed to process grid-like data, such as images. CNNs employ convolutional layers, which learn filters to extract local features from images. These filters are then combined to identify increasingly complex patterns, ultimately enabling tasks like object detection and image classification.

The hierarchical nature of CNNs allows for the extraction of increasingly abstract features, from edges and textures to objects and scenes.
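What a single convolutional filter computes can be shown without a deep learning framework. The sketch below slides a kernel over a list-of-lists image without padding ("valid" mode) and applies a ReLU activation; like most deep learning libraries, it computes cross-correlation (no kernel flip). In a real CNN the kernel values are learned; here the 1x2 kernel `[[-1, 1]]` is hand-picked to respond to dark-to-bright vertical edges.

```python
def conv2d_valid(img, kernel, bias=0.0):
    """Slide `kernel` over `img` without padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [
        [
            sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
            + bias
            for x in range(w - kw + 1)
        ]
        for y in range(h - kh + 1)
    ]


def relu(fmap):
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in fmap]


rising = [[0, 0, 1, 1] for _ in range(3)]   # dark-to-bright edge
falling = [[1, 1, 0, 0] for _ in range(3)]  # bright-to-dark edge
edge_kernel = [[-1, 1]]

rising_map = relu(conv2d_valid(rising, edge_kernel))    # fires at the edge
falling_map = relu(conv2d_valid(falling, edge_kernel))  # suppressed by ReLU
```

Stacking such layers, each operating on the previous layer's feature maps, is what lets a CNN build the progressively more abstract features described above.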

Transfer Learning in Computer Vision

Transfer learning is a powerful deep learning technique that leverages models pre-trained on massive datasets. Instead of training a model from scratch, transfer learning starts from a pre-trained model and fine-tunes its weights on a smaller, task-specific dataset. This significantly reduces the training time and resources needed, which makes the technique particularly valuable when labeled data for the target task is scarce.

Use of Pre-trained Models in Computer Vision Tasks

Pre-trained models, such as ResNet, Inception, and VGG, offer significant advantages in computer vision tasks. These models are trained on large-scale datasets such as ImageNet and therefore encode a broad range of visual patterns. By building on them, developers can often achieve high accuracy on specific tasks with relatively little data. Pre-trained models also serve as strong baselines that can be adapted to specific needs.

Advantages and Disadvantages of Deep Learning Models

Deep learning models in computer vision offer several advantages. Their ability to automatically learn complex features leads to superior performance in various tasks. Moreover, the flexibility of these models enables adaptations to different image variations and complexities. However, deep learning models also have limitations. They are computationally intensive, requiring significant resources for training and deployment.

Additionally, the “black box” nature of some models makes it challenging to understand the reasoning behind their decisions. Furthermore, deep learning models often require substantial amounts of data for optimal performance.

Application of Recurrent Neural Networks (RNNs) in Computer Vision

Recurrent Neural Networks (RNNs) can be effectively used for tasks involving sequential data or image sequences. While primarily associated with text processing, RNNs can also handle video analysis or image sequences by treating the images as frames in a sequence. This capability allows for tasks like recognizing actions or understanding image context within a video clip. RNNs can capture temporal dependencies between images, a critical element for analyzing image sequences.

Comparison of Deep Learning Models for Computer Vision

| Model | Strengths | Weaknesses |
|---|---|---|
| Convolutional Neural Networks (CNNs) | Excellent for image-based tasks; learn hierarchical features | Can be computationally expensive for some applications |
| Recurrent Neural Networks (RNNs) | Handle sequential data; suited to image sequences and video | Sequential processing is hard to parallelize; may struggle with long sequences |
| Transformer Networks | Model long-range dependencies well through self-attention; good for complex image understanding | High computational cost, since self-attention scales quadratically with the number of input tokens |

The table above provides a concise overview of different deep learning models and their strengths and weaknesses in computer vision applications. Choosing the appropriate model depends on the specific task requirements, available computational resources, and the nature of the data being processed.


Challenges and Future Directions

Computer vision, while rapidly advancing, faces several hurdles that limit its widespread adoption and full potential. Understanding these challenges and potential solutions is crucial for navigating the future trajectory of this technology. The ongoing quest for more robust, accurate, and ethical computer vision systems requires a multifaceted approach, combining technical advancements with careful consideration of societal impact.

Current Challenges in Computer Vision

Computer vision systems often struggle with complex or poorly lit scenes, where objects are partially occluded or vary significantly in appearance. These challenges lead to inaccurate or unreliable predictions, especially in real-world scenarios. For example, a system trained on images of cars in sunny conditions might perform poorly in foggy weather. Similarly, a system trained on faces without accessories may have difficulty recognizing a person wearing a hat or sunglasses.

The performance of computer vision systems is heavily reliant on the quality and quantity of training data. Insufficient or biased data can lead to skewed results and poor generalization to unseen scenarios.

Limitations of Current Computer Vision Systems

Current computer vision systems exhibit limitations in handling dynamic scenes, including scenarios with rapidly moving objects or unpredictable environmental factors. This limits their effectiveness in applications requiring real-time analysis or continuous monitoring. Furthermore, achieving robust generalization across different environments and conditions remains a significant hurdle. For example, a system trained to detect a specific type of bird in a forest might struggle to identify the same bird in a different habitat or season.

Another significant limitation is the computational cost associated with many computer vision algorithms, particularly deep learning models.

Potential for Advancements in Computer Vision

Advancements in deep learning, particularly the development of more efficient and adaptable architectures, hold significant promise for improving computer vision performance. The use of transfer learning, where models trained on one task are adapted for another, can reduce the need for extensive data for new tasks. For instance, a model trained to identify different types of flowers could be adapted to identify fruits or vegetables with minimal additional training.

Innovations in hardware, like specialized processors and improved cameras, are also contributing to faster and more accurate image processing.

Ethical Considerations in Computer Vision

Ethical considerations play a crucial role in the development and deployment of computer vision systems. Bias in training data can lead to discriminatory outcomes, potentially impacting vulnerable groups. Privacy concerns regarding the collection and use of image data are paramount. Transparency and explainability in the decision-making processes of computer vision systems are essential for building trust and ensuring accountability.

The use of computer vision in surveillance applications, for example, must be carefully regulated to prevent abuse and protect individual liberties.

Potential Future Applications of Computer Vision

Computer vision’s future applications span diverse fields, from healthcare and autonomous vehicles to environmental monitoring and security. In healthcare, computer vision can aid in medical image analysis, enabling earlier disease detection and improved diagnosis. In autonomous vehicles, it plays a crucial role in object recognition, navigation, and accident avoidance. Computer vision can be deployed for environmental monitoring to track deforestation, monitor pollution levels, and assist in conservation efforts.

Furthermore, computer vision can be used in security systems to detect anomalies and identify potential threats.

Emerging Trends in Computer Vision Research

Recent research focuses on enhancing the robustness and adaptability of computer vision systems to real-world scenarios. This includes exploring techniques like adversarial training to improve resilience to manipulated data. Multimodal learning approaches, which combine computer vision with other modalities like sensor data, are gaining traction. This approach aims to create more comprehensive and context-aware systems. Finally, the development of explainable AI (XAI) methods is crucial to ensure the trustworthiness and reliability of computer vision systems in critical applications.
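Adversarial training adds deliberately perturbed inputs to the training process. One common way to generate them is the fast gradient sign method (FGSM). FGSM shifts each input feature by a small step ε in the direction that increases the loss. The sketch below applies FGSM to a plain logistic-regression classifier, so the input gradient can be written by hand. The model, weights, ε, and learning rate are illustrative assumptions, not a recipe for a real vision network.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_loss(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model on one example."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: x_adv = x + eps * sign(dLoss/dx)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad_x = [(p - y) * wi for wi in w]  # d(BCE)/dx for logistic regression
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]

def adversarial_training_step(w, b, x, y, eps=0.1, lr=0.1):
    """One SGD step on the adversarial example instead of the clean one."""
    x_adv = fgsm(w, b, x, y, eps)
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
    err = p - y
    w = [wi - lr * err * xi for wi, xi in zip(w, x_adv)]
    return w, b - lr * err

# Illustrative model and input.
w, b = [1.5, -2.0, 0.5], 0.1
x, y = [0.2, 0.4, 0.9], 1
x_adv = fgsm(w, b, x, y, eps=0.05)
print(bce_loss(w, b, x, y), bce_loss(w, b, x_adv, y))  # loss rises on x_adv
w2, b2 = adversarial_training_step(w, b, x, y, eps=0.05)
```

In a real network, the input gradient comes from automatic differentiation rather than a hand-derived formula. Clean and adversarial examples are usually mixed within each training batch.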

Potential Future Directions in Computer Vision

| Area | Potential Future Direction |
|---|---|
| Robustness | Developing computer vision systems that can handle diverse and challenging conditions, including noise, occlusions, and variations in lighting and viewpoints. |
| Adaptability | Creating computer vision systems capable of learning and adapting to new tasks and environments with minimal retraining. |
| Explainability | Developing methods to understand and interpret the decision-making processes of computer vision models. |
| Privacy | Implementing techniques to protect user privacy during image collection and processing. |
| Multimodality | Combining computer vision with other data sources, such as sensor data, to create more comprehensive and context-aware systems. |

Performance Evaluation Metrics

Evaluating computer vision systems is crucial for understanding their strengths and weaknesses. Different metrics are employed depending on the specific task, such as object detection, image classification, or image segmentation. These metrics provide quantifiable measures of system performance, allowing for comparisons between various models and approaches.

Various Metrics for Evaluating Computer Vision Systems

Computer vision systems are evaluated using a range of metrics tailored to the specific task. These metrics quantify aspects like accuracy, precision, recall, and the degree of overlap between predicted and actual results. Understanding these metrics is essential for assessing the effectiveness of a computer vision system in real-world applications.

- Accuracy: This metric is commonly used for classification tasks. It is the proportion of correctly classified instances out of all instances. High accuracy generally indicates a well-performing model. However, accuracy can be misleading on imbalanced data, where always predicting the majority class already scores well. For example, a system that correctly classifies 95% of images as containing or not containing a specific object has 95% accuracy.

- Precision and Recall in Object Detection: In object detection, precision and recall measure the system's ability to accurately locate and identify objects. Precision is the proportion of correct detections among all objects detected by the system. Recall is the proportion of actual objects that the system correctly detects. For instance, if a system detects 10 cars and 8 of them are actually cars, the precision is 80%. If there are 10 cars in the scene and the system correctly detects 8 of them, the recall is 80%.

- F1-score: The F1-score is the harmonic mean of precision and recall, F1 = 2 × (precision × recall) / (precision + recall). It is high only when both precision and recall are high. It is particularly useful when precision and recall matter equally. A higher F1-score indicates better performance. For example, in a medical image analysis system, a high F1-score suggests that the system finds most relevant abnormalities (high recall) while raising few false alarms (high precision).
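The definitions above translate directly into code. The sketch below assumes the detection counts are already available. In practice, a detection usually counts as a true positive only if it matches a ground-truth object above an IoU threshold, commonly 0.5. That matching step is omitted here.

```python
def accuracy(y_true, y_pred):
    """Fraction of instances whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def precision_recall_f1(true_positives, num_predicted, num_actual):
    """Precision, recall, and F1 from detection counts.

    true_positives: detections that match a real object
    num_predicted:  all detections the system reported
    num_actual:     all real (ground-truth) objects
    """
    precision = true_positives / num_predicted if num_predicted else 0.0
    recall = true_positives / num_actual if num_actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1

print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
# Car example: 10 detections, 8 of them correct, 10 cars actually present.
print(precision_recall_f1(true_positives=8, num_predicted=10, num_actual=10))
```

With 16 cars actually present instead of 10, recall drops to 0.5, and F1 falls to about 0.62, even though precision is unchanged.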

Intersection over Union (IoU)

IoU is a crucial metric for evaluating the performance of object detection and segmentation tasks. It measures the overlap between the predicted bounding box or mask and the ground truth bounding box or mask. A higher IoU indicates better alignment between the predicted and actual results.

IoU = (Area of Overlap) / (Area of Union), where Area of Union = Area of Prediction + Area of Ground Truth − Area of Overlap

For example, suppose the predicted bounding box for a car has an area of 100 square pixels, the ground truth bounding box has an area of 150 square pixels, and the area of overlap is 80 square pixels. The area of union is then 100 + 150 − 80 = 170 square pixels, and the IoU is 80/170 ≈ 0.47. A high IoU suggests that the system's predictions are accurate in terms of location.
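Here is a minimal sketch of IoU for axis-aligned bounding boxes. It assumes boxes are given as `(x_min, y_min, x_max, y_max)`, a common convention, though some libraries use `(x, y, width, height)` instead. The example boxes are chosen so that their areas are 100 and 150 square pixels with an 80-square-pixel overlap, giving a union of 100 + 150 − 80 = 170.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the intersection rectangle (0 if there is no overlap).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    overlap = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    # Subtract the overlap once, because it is counted in both areas.
    union = area_a + area_b - overlap
    return overlap / union if union > 0 else 0.0

predicted = (0, 0, 10, 10)      # area 100
ground_truth = (0, 2, 10, 17)   # area 150, overlapping predicted in 80
print(round(box_iou(predicted, ground_truth), 2))  # prints 0.47
```

Forgetting to subtract the overlap (dividing by 250 instead of 170) is a common mistake. It systematically underestimates IoU.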

Image Segmentation Performance

Evaluating image segmentation performance often involves metrics like pixel accuracy, mean intersection over union (mIoU), and class-wise IoU. Pixel accuracy assesses the overall correctness of pixel assignments, while mIoU considers the average overlap across all classes. Class-wise IoU examines the overlap for each specific class.

- Pixel Accuracy: The percentage of correctly classified pixels. A higher pixel accuracy indicates a better overall performance.

- Mean Intersection over Union (mIoU): The average IoU across all classes, providing a measure of the system’s overall performance on different objects. A higher mIoU indicates better performance.

- Class-wise IoU: The IoU for each specific class, highlighting the system’s performance on individual objects or categories.
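The three segmentation metrics can be computed from a ground-truth label map and a predicted label map. To keep this sketch dependency-free, it assumes both maps are flattened into equal-length lists of integer class labels. Real label maps are usually 2D arrays. Classes that appear in neither map are skipped when averaging, which is one common convention for mIoU.

```python
def segmentation_metrics(gt, pred, num_classes):
    """Pixel accuracy, class-wise IoU, and mIoU for flattened label maps."""
    pixel_acc = sum(g == p for g, p in zip(gt, pred)) / len(gt)
    class_iou = {}
    for c in range(num_classes):
        # Pixels labeled c in both maps (intersection) and in either map (union).
        inter = sum(g == c and p == c for g, p in zip(gt, pred))
        union = sum(g == c or p == c for g, p in zip(gt, pred))
        if union > 0:
            class_iou[c] = inter / union
    miou = sum(class_iou.values()) / len(class_iou) if class_iou else 0.0
    return pixel_acc, class_iou, miou

gt   = [0, 0, 1, 1, 2, 2]  # ground-truth class of each of six pixels
pred = [0, 1, 1, 1, 2, 0]  # predicted class of each pixel
pixel_acc, class_iou, miou = segmentation_metrics(gt, pred, num_classes=3)
print(pixel_acc, class_iou, miou)
```

Here, 4 of the 6 pixels are correct, but class 0 reaches an IoU of only 1/3. Class-wise IoU therefore exposes weak classes that pixel accuracy can hide.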

Interpreting Evaluation Metrics in Computer Vision

Interpreting evaluation metrics involves considering the context of the application and the specific goals of the computer vision system. A high accuracy in a medical image analysis task might be crucial, while in a self-driving car application, precision and recall in object detection might be paramount. The choice of appropriate metrics depends on the specific task and its requirements.

Summary Table of Performance Evaluation Metrics

| Metric | Description | Task Applicability |
|---|---|---|
| Accuracy | Proportion of correctly classified instances | Classification |
| Precision | Proportion of correctly identified objects among detected objects | Object detection |
| Recall | Proportion of correctly identified objects among all objects present | Object detection |
| F1-score | Harmonic mean of precision and recall | Classification, Object detection |
| IoU | Overlap between predicted and ground truth | Object detection, Segmentation |
| mIoU | Average IoU across all classes | Segmentation |

Ethical Considerations in Computer Vision

Computer vision systems, while powerful tools, raise important ethical concerns that must be addressed proactively. The increasing deployment of these systems across various sectors necessitates a careful examination of potential biases, privacy implications, and broader societal impacts. Responsible development and deployment are crucial to ensure these technologies benefit society as a whole.

Potential Biases in Computer Vision Systems

Computer vision algorithms are trained on data, and if this data reflects existing societal biases, the resulting system will likely perpetuate and even amplify these biases. For instance, if a facial recognition system is trained primarily on images of one demographic, it may perform poorly or inaccurately on individuals from other groups. This can lead to unfair or discriminatory outcomes in areas like law enforcement, loan applications, or even hiring processes.

Addressing these biases requires careful data selection, algorithm design, and ongoing monitoring.
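One concrete form of ongoing monitoring is disaggregated evaluation. This means computing a metric separately for each demographic group, rather than only in aggregate, so that a performance gap like the one described above becomes visible. Below is a minimal sketch with made-up labels and hypothetical group names "A" and "B". The choice of groups, metric, and acceptable gap depends on the application.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    return max(per_group.values()) - min(per_group.values())

# Made-up audit data: overall accuracy is 5/8, which hides the disparity.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group, max_accuracy_gap(per_group))
```

Repeating this check on fresh data after deployment turns "ongoing monitoring" into a measurable routine.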

Impact of Computer Vision on Privacy

Computer vision systems often collect and process vast amounts of personal data. This data may include images, videos, and even inferred information about individuals. The potential for misuse of this data raises serious privacy concerns. Ensuring data security, obtaining informed consent, and establishing clear guidelines for data usage are critical to mitigate privacy risks. For example, facial recognition in public spaces must be carefully considered alongside individuals’ rights to privacy.

Ethical Implications of Computer Vision in Surveillance

The use of computer vision in surveillance systems raises significant ethical concerns, especially regarding potential abuses of power and the erosion of individual freedoms. The potential for mass surveillance, the lack of transparency in decision-making processes, and the potential for misidentification all warrant careful consideration. Surveillance systems must be designed with strict guidelines for data collection, storage, and usage to prevent misuse.

Minimizing the scope of surveillance and prioritizing due process are essential.

Importance of Fairness and Accountability in Computer Vision

Fairness and accountability are paramount in computer vision systems. These systems should treat all individuals equitably, regardless of race, gender, or other characteristics. Systems should be transparent and explainable, allowing users to understand how decisions are made. This transparency fosters trust and accountability, crucial in applications such as law enforcement and judicial processes. For example, a loan application system should not discriminate against a specific demographic based on biased data.

Examples of Responsible Use of Computer Vision

Computer vision has the potential for positive societal impact. For instance, in healthcare, it can assist in early disease detection and diagnosis. In agriculture, it can optimize crop yields. In autonomous vehicles, it enables safe navigation. These examples showcase how computer vision can be used for beneficial purposes.

Guidelines for Developing Ethical Computer Vision Systems

Developing ethical computer vision systems requires a multi-faceted approach. Data used for training should be diverse and representative of the population. Algorithms should be designed to mitigate biases and ensure fairness. Clear guidelines for data collection, storage, and usage should be established and enforced. Regular audits and assessments should be conducted to monitor system performance and identify potential issues.

The systems must be transparent and accountable.

Table of Ethical Considerations in Various Computer Vision Applications

| Application | Potential Bias | Privacy Impact | Surveillance Concerns | Fairness and Accountability |
|---|---|---|---|---|
| Facial Recognition in Law Enforcement | Misidentification of individuals from underrepresented groups | Potential for misuse of facial data | Concerns about mass surveillance and lack of transparency | Ensuring equitable treatment of all individuals |
| Self-Driving Cars | Bias in object detection | Data collection and use from vehicles | Potential for misuse of data in accidents | Ensuring safe and fair operation for all users |
| Medical Image Analysis | Bias in image interpretation based on demographics | Patient privacy regarding medical data | Limited surveillance in healthcare settings | Fair and accurate diagnosis for all patients |
| Retail Analytics | Bias in customer profiling | Collection and use of customer data | Limited surveillance in retail environments | Ensuring equitable pricing and customer experience |

Final Wrap-Up

In conclusion, computer vision technology offers a fascinating blend of technological advancement and real-world applications. While significant progress has been made, challenges remain in areas like accuracy, ethical implications, and computational resources. The future of computer vision promises exciting developments, and continued research and innovation are crucial for unlocking its full potential.

Q&A

What are some common applications of computer vision in healthcare?

Computer vision is used in healthcare for tasks like automated disease detection in medical images (X-rays, MRIs), assisting in surgical procedures, and improving patient monitoring.

What are the limitations of current computer vision systems?

Current systems can struggle with complex scenes, variations in lighting and image quality, and subtle or occluded objects. They may also exhibit biases if trained on skewed datasets.

How can image preprocessing improve computer vision accuracy?

Image preprocessing techniques like noise reduction, contrast enhancement, and resizing can significantly reduce errors and improve the accuracy of subsequent analysis steps.

What are the ethical considerations associated with using computer vision in surveillance?

Privacy concerns, potential for bias, and the potential for misuse are key ethical considerations when deploying computer vision in surveillance settings.